Run Llama 2 70B on Your GPU with ExLlamaV2

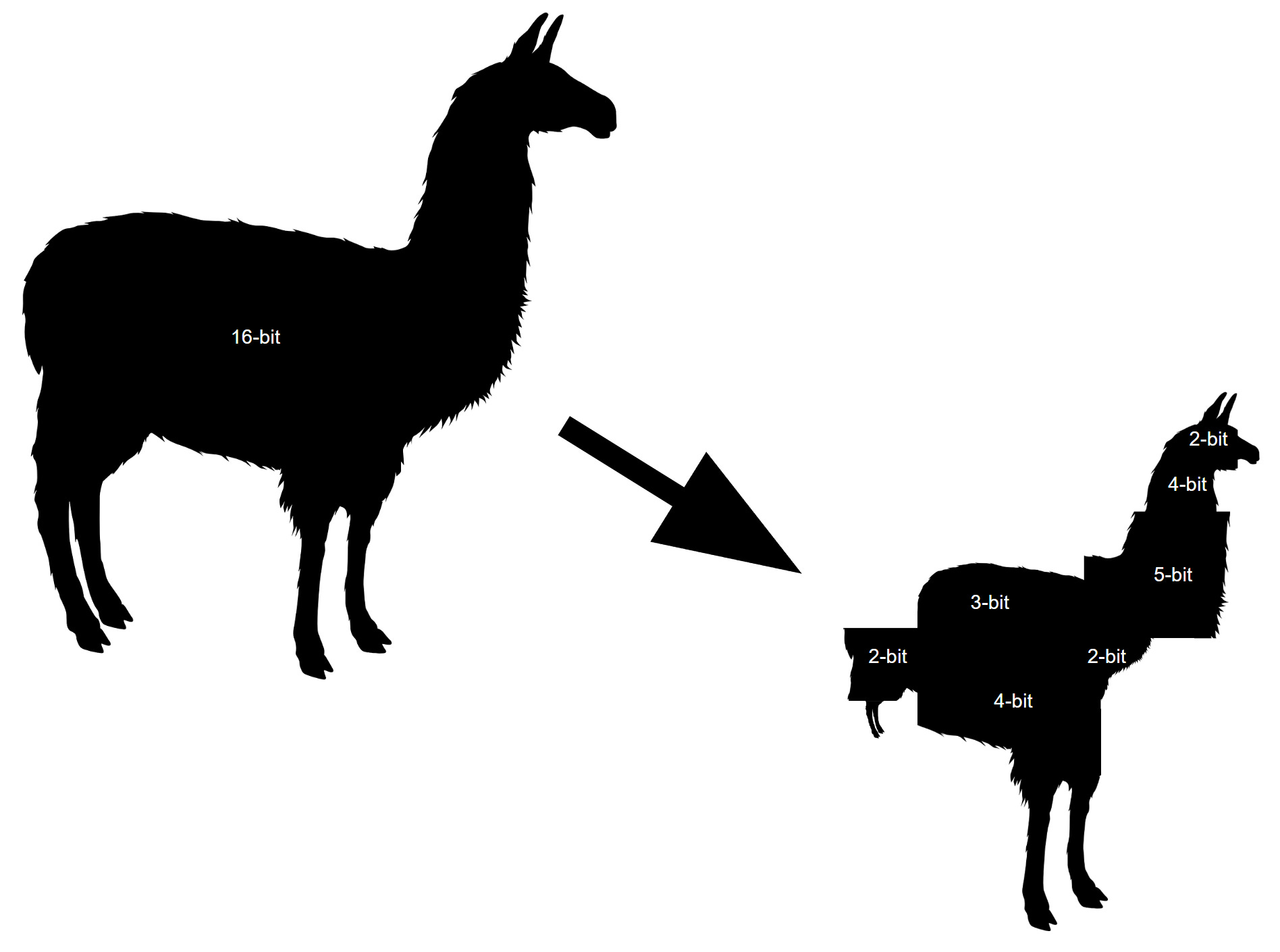

LLaMA-65B and Llama 2 70B perform best when paired with a GPU that has at least 40GB of VRAM. One user writes: "Even if it didn't provide any speed increase, I would still be OK with this. I have a 24GB 3090, so 24GB VRAM + 32GB RAM = 56GB. I also wanted to know the minimum CPU." Another source lists the Llama 2 hardware requirements for 4-bit quantization. One setup uses llama.cpp with `llama-2-13b-chat.ggmlv3.q4_0.bin`, `llama-2-13b-chat.ggmlv3.q8_0.bin`, and `llama-2-70b-chat.ggmlv3.q4_0.bin` from TheBloke. A typical question: "Background: I would like to run a 70B Llama 2 instance locally (not train, just run)..."
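To see why a 70B model at 4-bit quantization lands near the 40GB figure, a rough back-of-the-envelope estimate helps. The sketch below counts only the weights, using nominal parameter counts and exactly 4 bits per weight; both are simplifying assumptions. Real 4-bit formats store extra scaling data, and inference also needs memory for the KV cache and activations, so actual usage is higher. The function names are illustrative and not taken from any library.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts (assumption); real checkpoints differ slightly.
MODELS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}


def report(bits_per_weight: float = 4.0) -> dict:
    """Weight-only memory estimate for each model size."""
    return {name: weight_memory_gb(n, bits_per_weight) for name, n in MODELS.items()}


if __name__ == "__main__":
    for name, gb in report().items():
        print(f"Llama 2 {name} at 4 bits: ~{gb:.1f} GB for weights alone")
```

Under these assumptions the 70B weights alone come to about 35 GB, which is more than a single 24GB card holds. This is why users with a 24GB GPU ask about splitting the model between VRAM and system RAM.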

"Agreement" means the terms and conditions for... Llama 2 is also available under a permissive commercial license, whereas Llama 1 was limited to non-commercial use. Llama 2 is capable of processing... Not everyone agrees that the license is open: "Meta's license for the LLaMa models and code does not meet this standard. Specifically, it puts restrictions on commercial use for..." Quick setup and how-to guide: "Getting started with Llama. Welcome to the getting started guide for Llama." Another view: "Llama-v2 is open source, with a license that authorizes commercial use. This is going to change the landscape of the LLM..."

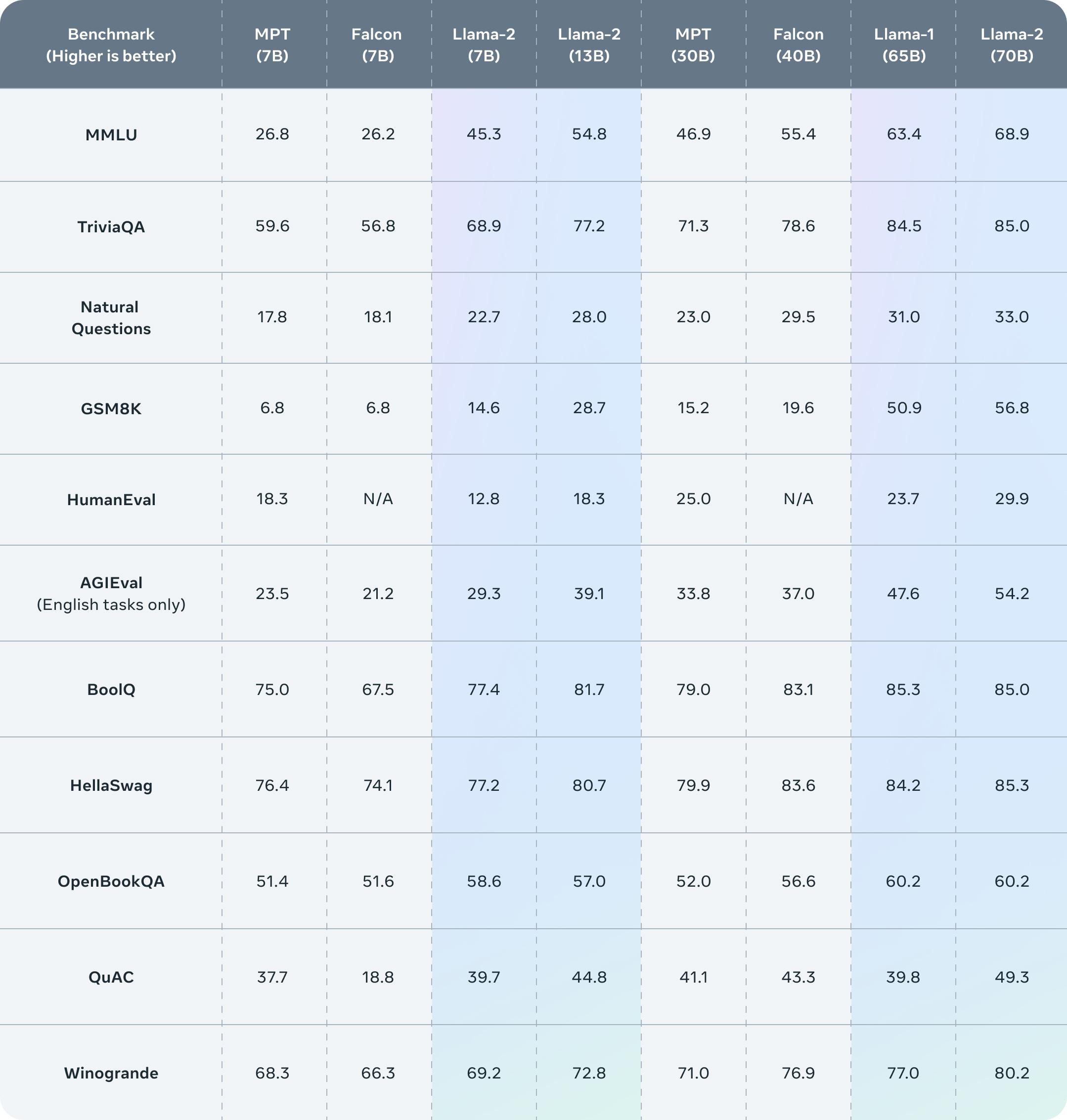

What You Need to Know About Meta's Llama 2 Model | Deepgram

Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7... An issue titled "Could not load Llama model from path" was opened on Jul 19, 2023 and has 16 comments. One user asks: "I would like to use Llama 2 7B locally on my Windows 11 machine with Python. I have a conda venv installed." Overview of llama.cpp running on a single Nvidia Jetson board with 16GB RAM from Seeed Studio. llama2-webui (MIT license): running Llama 2 with a Gradio web UI on GPU or CPU from anywhere.

The CPU requirement for GPTQ (GPU-based) models is lower than for models that are optimized for CPU. One log line reads: `llama-2-13b-chat.ggmlv3.q4_0.bin` offloaded 43/43 layers to GPU. The performance of a Llama 2 model depends heavily on the hardware. It's likely that you can fine-tune the Llama 2 13B model using LoRA or QLoRA with a single consumer GPU with 24GB of memory and using... One user asks: "Hello, I'd like to know if 48, 56, 64, or 92 GB is needed for a CPU setup. Supposedly with ExLlama 48GB is all you'd need for 16k. It's possible GGML may need more..."
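When a model does not fit entirely in VRAM, llama.cpp-style runtimes let you offload only some layers to the GPU and keep the rest in system RAM. A minimal sketch of how one might estimate that layer count follows. It assumes all layers are the same size and that a fixed amount of VRAM is reserved for the KV cache and scratch buffers; both are simplifications, and `layers_to_offload` is a hypothetical helper, not part of any library.

```python
def layers_to_offload(model_bytes: int, n_layers: int,
                      vram_bytes: int, reserve_bytes: int = 0) -> int:
    """Estimate how many equally sized layers fit in the usable VRAM.

    Assumes every layer takes model_bytes / n_layers (a simplification)
    and that reserve_bytes of VRAM is held back for cache and buffers.
    """
    usable = max(0, vram_bytes - reserve_bytes)
    # Integer math: how many layer-sized chunks fit in the usable VRAM.
    fit = usable * n_layers // model_bytes
    return min(n_layers, fit)


if __name__ == "__main__":
    GB = 10**9
    # Hypothetical numbers: a 40 GB model with 80 layers on a 24 GB card,
    # keeping 2 GB free for cache and buffers.
    print(layers_to_offload(40 * GB, 80, 24 * GB, reserve_bytes=2 * GB))
```

A small model on the same card would return its full layer count, matching the "43/43 layers" case quoted above. Treat the result as a starting point and adjust downward if the runtime reports out-of-memory errors.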
